Chapter 1 – theory

What can I use artificial neural networks (ANN) for?

ANN are used for pattern recognition, e.g. letter identification (handwritten or printed), voice recognition (to identify the speaker or the content), forecasts (stock prices, weather) and the analysis of ECGs, EEGs, X-rays ...

Who is this tutorial written for?

It is for beginners. The last exercise in the second chapter requires Java knowledge, but you don't have to do it.

How complicated will it be?

To understand completely how ANN work, you actually need advanced mathematical knowledge (if you can work with gradients, you are probably at the right level). But I don't want to take this for granted. Instead, I want to provide an introduction with a minimum of mathematics that gives you an incentive for self-study.

The first chapter gives you an understanding of the essential functionality.

Chapter two follows with exercises using a freeware program and with training an artificial neural network for letter recognition using a Java framework.

ANN are amazing, because you can experiment a lot with them.

What are ANN and what are they used for?

Artificial neural networks are modelled on natural neural networks such as our brain. The brain is a net of nerve cells (= neurons). Neurons in an artificial network are small computing units which can be implemented in hardware or software.

Now you might object that a computer works much faster than the brain. So why the heck should we use the brain as a model? If you compare the clock frequency of a modern computer with the frequency of our brain (the neural frequency is 100 – 1000 Hz, which means a neuron can fire again after 10^-3 seconds at the earliest), the objection is absolutely correct. None of us can keep up with a computer in calculation. But there are a lot of tasks a brain can accomplish much better. What would an algorithm look like that is able to identify the face of your neighbour? Can you tell from your experience how you recognize someone? And what about your aunt who has just come from the hairdresser and also has new glasses? Why do you recognize her anyway, and how can this be so fast? Why does it work with different lighting, different perspectives and different facial expressions?

We are not aware of the process. We don't know how we do it, we are just able to. That's why it is so hard to invent an algorithm for it. In addition, computers are normally very accurate but not fault-tolerant, whereas it is the other way round with human beings. The secret lies in the parallelism with which the neurons work. Each one of our 10^11 nerve cells has 100 000 to 200 000 connections to other neurons. A huge amount of data can be processed simultaneously. Our computers work mostly sequentially. Although there is a development towards more parallelism (multi-core processors, ...), they cannot keep up with our brains.

Whereas chemical substances play an important role in our brain, those are ignored in artificial neural networks. For simplicity they only use the electrical model.

Just like brains, ANN are able to learn.

What does a neuron look like?

The simplest model of an artificial neuron is the McCulloch-Pitts neuron, named after its inventors Warren McCulloch and Walter Pitts (1943). It is also called a Threshold Logic Unit (TLU).

The TLU is built as follows: There are several inputs and one output. The input values may be 1 or 0. Our neuron simply sums up all input values. For instance, if three inputs have the value 1 and two inputs have the value 0, the neuron will calculate a total of 3. If this sum reaches a given threshold, the neuron will fire, which means the output will be 1. Otherwise the output is 0.

E.g.: A neuron has five inputs and the threshold is 3. If only two inputs have the value 1, the neuron won't fire. It needs at least three active inputs to fire.
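The firing rule can be sketched in a few lines of Java. This is only an illustration: the names `ThresholdUnit` and `fire` are made up for this tutorial, and I assume that the neuron fires as soon as the sum reaches the threshold, which is how the five-input example works.

```java
class ThresholdUnit {
    // Returns 1 if the number of active inputs reaches the threshold, else 0.
    static int fire(int[] inputs, int threshold) {
        int sum = 0;
        for (int input : inputs) {
            sum += input; // each input is 0 or 1
        }
        return sum >= threshold ? 1 : 0;
    }

    public static void main(String[] args) {
        // Five inputs, threshold 3, as in the example.
        System.out.println(fire(new int[] {1, 1, 0, 0, 0}, 3)); // two active inputs: prints 0
        System.out.println(fire(new int[] {1, 1, 1, 0, 0}, 3)); // three active inputs: prints 1
    }
}
```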

Now this neuron shall be able to learn. For this purpose, weights are assigned to the inputs. A weight indicates how powerful its input is. Weights may be real numbers. Each input is multiplied by its weight. Afterwards the sum is computed. The higher the weight, the more the input affects the sum.

This is related to how natural nerve cells work. The nerve fibers are of variable thickness. The thicker they are, the more powerful their signals to other nerve cells are and the more impact they have on them. During learning the thickness is adjusted. Nerve fibers which are seldom used may degenerate, whereas the often used ones become even stronger. For our artificial cell this means that a higher weight indicates a stronger connection and impact from another cell.

If you know some digital logic, you will be pleased: Our neuron model allows us to imitate logic gates.

NOT-gate:

For this case we just need one input whose weight is -1.

The threshold may be 0. The effect: Our neuron will fire if and only if the input is 0, because the sum 0 then reaches the threshold. If the input value is 1, the sum will be -1 and the neuron won't fire, because the sum is below our limit.

AND-gate:

The neuron has two inputs. It shall only fire if both inputs are active. One possibility: We set our threshold to 2 and all weights to 1. As soon as both inputs are 1, the sum equals 1*1 + 1*1 = 2, which reaches the threshold.
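Here is a small sketch of a weighted neuron with the NOT and AND settings from this section. The class and method names (`WeightedUnit`, `fire`, `not`, `and`) are my own choice for illustration. The OR-gate is left out on purpose, because it is your exercise.

```java
class WeightedUnit {
    // Fires (returns 1) if the weighted sum of the inputs reaches the threshold.
    static int fire(int[] inputs, double[] weights, double threshold) {
        double sum = 0.0;
        for (int i = 0; i < inputs.length; i++) {
            sum += weights[i] * inputs[i];
        }
        return sum >= threshold ? 1 : 0;
    }

    // NOT-gate: one input with weight -1, threshold 0.
    static int not(int a) {
        return fire(new int[] {a}, new double[] {-1.0}, 0.0);
    }

    // AND-gate: two inputs with weight 1 each, threshold 2.
    static int and(int a, int b) {
        return fire(new int[] {a, b}, new double[] {1.0, 1.0}, 2.0);
    }

    public static void main(String[] args) {
        System.out.println("NOT 0 = " + not(0) + ", NOT 1 = " + not(1));
        for (int a = 0; a <= 1; a++) {
            for (int b = 0; b <= 1; b++) {
                System.out.println(a + " AND " + b + " = " + and(a, b));
            }
        }
    }
}
```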

OR-gate:

This one is for you to practise. The neuron has two inputs. Define the weights and the threshold (there is more than one solution).

What did this show us?

With ANN we are able to imitate every logic circuit. But this is not their purpose. It only demonstrates their abilities.

In fact, research stagnated in 1969, because it was shown that a single neuron cannot realize the XOR function (although it is possible with three neurons). It was said that ANN research was a dead end since neurons could only learn simple logical functions.

Today we know that they are able to do more if we use them the right way.
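You can convince yourself that a single threshold neuron cannot realize XOR with a small experiment. The following sketch tries all weights and thresholds on a coarse grid (from -2 to 2 in steps of 0.5, an arbitrary choice of mine) and counts the combinations that reproduce a truth table. A grid search is not a proof, of course. The short argument is: XOR would need a threshold above 0, each single weight at least as large as the threshold, and at the same time the sum of both weights below the threshold, which is impossible. All names in the code are hypothetical.

```java
class SingleNeuronSearch {
    static final int[][] INPUTS = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};

    // Counts the (w1, w2, threshold) combinations on the grid for which
    // one threshold neuron reproduces the given truth table.
    static int countSolutions(int[] targets) {
        int count = 0;
        for (int i = -4; i <= 4; i++) {
            for (int j = -4; j <= 4; j++) {
                for (int k = -4; k <= 4; k++) {
                    if (matches(i * 0.5, j * 0.5, k * 0.5, targets)) {
                        count++;
                    }
                }
            }
        }
        return count;
    }

    // Checks whether the neuron gives the target output for all four inputs.
    static boolean matches(double w1, double w2, double threshold, int[] targets) {
        for (int n = 0; n < INPUTS.length; n++) {
            double sum = w1 * INPUTS[n][0] + w2 * INPUTS[n][1];
            int output = sum >= threshold ? 1 : 0;
            if (output != targets[n]) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println("AND solutions: " + countSolutions(new int[] {0, 0, 0, 1}));
        System.out.println("XOR solutions: " + countSolutions(new int[] {0, 1, 1, 0}));
    }
}
```

For AND the search finds several settings (among them weights 1 and 1 with threshold 2), for XOR it finds none.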

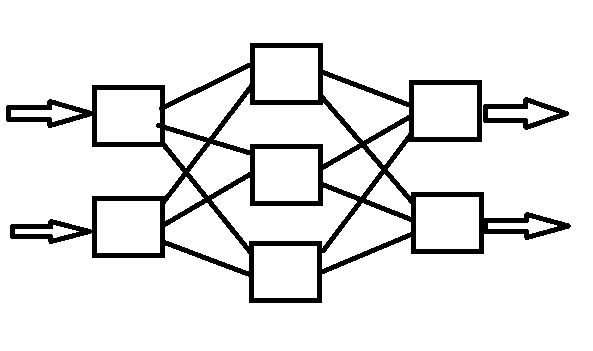

What does a neural network look like?

To answer this, I want to introduce a frequently used net model: the feedforward neural network. Such a net has clearly defined layers in which the neurons are arranged.

There is an input layer and an output layer. There may also be hidden layers in between. They are called hidden because the user is not able to see their values. Each neuron in one layer is connected to each neuron in the next layer. It may look like this:

Task: Create a net that realizes XOR. As a reminder:

0 xor 0 = 0

0 xor 1 = 1

1 xor 0 = 1

1 xor 1 = 0

To help you, this may be a starting point:

How does an ANN learn? (two examples of learning techniques)

1. Supervised learning

You can teach an ANN by showing it examples of patterns. As already mentioned, learning means adjusting the weights. Formulas determine how the weights are changed.

If you show a net a pattern, e.g. a picture of a letter ("look, little net, this is an A"), the net will compute the discrepancy between the desired output and the actual output.

Afterwards the net adjusts its weights so that it approaches the desired output. This has to be repeated several times (just as we had to repeat the subject matter at school).

In this way you can train your net to recognize an "A" in three different fonts. After this you will discover something fascinating: The net will be able to recognize an "A" in fonts it has never learned. It knows from your examples what an "A" approximately looks like, and that is pretty good for character recognition. Of course a net may be mistaken; it isn't exact. But its fault tolerance is needed for some tasks (humans are also fault-tolerant and make a lot of mistakes).
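To make "adjusting the weights" more concrete, here is a minimal sketch of supervised learning for a single threshold neuron. The tutorial does not say which formula is used, so I assume the classic perceptron learning rule here: after a wrong answer, each weight is changed by learning rate * (desired output - actual output) * input. The neuron learns AND, and all names (`PerceptronRule`, `train`, `output`) are made up for the example.

```java
class PerceptronRule {
    double[] weights = {0.0, 0.0};
    double threshold = 0.0;

    // Actual output of the neuron for one example.
    int output(int[] inputs) {
        double sum = weights[0] * inputs[0] + weights[1] * inputs[1];
        return sum >= threshold ? 1 : 0;
    }

    // Shows every example once per epoch and adjusts the weights after each
    // wrong answer. Returns the number of epochs used.
    int train(int[][] inputs, int[] targets, double learningRate, int maxEpochs) {
        for (int epoch = 1; epoch <= maxEpochs; epoch++) {
            int mistakes = 0;
            for (int n = 0; n < inputs.length; n++) {
                int error = targets[n] - output(inputs[n]); // -1, 0 or +1
                if (error != 0) {
                    mistakes++;
                    for (int i = 0; i < weights.length; i++) {
                        weights[i] += learningRate * error * inputs[n][i];
                    }
                    // Firing too often raises the threshold, too rarely lowers it.
                    threshold -= learningRate * error;
                }
            }
            if (mistakes == 0) {
                return epoch; // every example is answered correctly
            }
        }
        return maxEpochs;
    }

    public static void main(String[] args) {
        int[][] inputs = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
        int[] targets = {0, 0, 0, 1}; // AND
        PerceptronRule neuron = new PerceptronRule();
        int epochs = neuron.train(inputs, targets, 0.5, 100);
        System.out.println("Epochs used: " + epochs);
        for (int[] in : inputs) {
            System.out.println(in[0] + " AND " + in[1] + " -> " + neuron.output(in));
        }
    }
}
```

This rule only works for a single neuron and for tasks like AND that one neuron can solve. Nets with hidden layers need more elaborate formulas.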

2. Unsupervised learning

The net is given some patterns which it shall categorize by itself. You only use this way of learning if you don't know what the result should be. E.g. you can encode characteristics of animals (Does it have fur? Yes would be 1, no would be 0. There has to be one input for each question, which takes the encoded answer) and give them to the net.

After working through all the given animals, the net will place animals with similar characteristics close together. Close means that the outputs will look similar, e.g. for the encoded inputs of a horse and a zebra. In contrast, the outputs for a lion and a midge will probably look different.
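The following sketch shows only the encoding step, not the learning itself. The five yes/no questions are hypothetical examples of mine. The code counts how many answers differ between two animals, which gives a rough idea of what "similar characteristics" means for the inputs. An unsupervised net would have to discover such groups by itself.

```java
class AnimalFeatures {
    // Questions (1 = yes, 0 = no): fur? four legs? hooves? stripes? wings?
    static final int[] HORSE = {1, 1, 1, 0, 0};
    static final int[] ZEBRA = {1, 1, 1, 1, 0};
    static final int[] LION  = {1, 1, 0, 0, 0};
    static final int[] MIDGE = {0, 0, 0, 0, 1};

    // Number of questions that are answered differently for the two animals.
    static int distance(int[] a, int[] b) {
        int differences = 0;
        for (int i = 0; i < a.length; i++) {
            if (a[i] != b[i]) {
                differences++;
            }
        }
        return differences;
    }

    public static void main(String[] args) {
        System.out.println("horse vs. zebra: " + distance(HORSE, ZEBRA)); // prints 1
        System.out.println("lion vs. midge: " + distance(LION, MIDGE));   // prints 3
    }
}
```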

Chapter 2 - practical experience

What do I need?

We work with Neuroph now. You can download it here:

http://neuroph.sourceforge.net/

The zip file contains a Java program called easyNeurons.jar which is for creating, training and testing ANN.

In addition it contains a Java framework that enables you to code your own ANN. I will explain both ways.

How can I use that?

You can start easyNeurons.jar with a simple double-click.

To use the framework you need to add neuroph.jar to your Java project.

E.g. Eclipse:

Create a new project. Right-click on your project and choose "Properties". Under Java Build Path - Libraries, click the button "Add External JARs ...". Choose the file neuroph.jar. That's all.

There are a lot of English tutorials for Neuroph which I recommend for further study. You can find them on the Neuroph website under Documentation – Tutorials.

1. XOR with easyNeurons

Your task: Create and train a simple ANN so that it can realize the XOR function.

The menu item "networks" allows you to choose a network type. At this point we pick the "Multi Layer Perceptron". The term perceptron has different meanings depending on the source and should be used with caution. It can stand for a single neuron whose output is 0 or 1, a layer of parallel neurons or a whole feedforward network with several layers.

This program uses perceptron in the sense of a feedforward network that has two layers (input and output layer). Since we want to add hidden layers, we choose the option "Multi Layer Perceptron".

For the input layer we declare two neurons, for the output layer one. The hidden layer gets four neurons. We enter this in the dialog. The other options are left at their defaults (they indicate, for instance, which mathematical functions are used for learning).

Now we should create a training set, i.e. the example patterns with which the net will be trained. Click on "Training" - "New Training Set". The type is "supervised". We name the set "XOR" and give it two inputs and one output. A click on "next" takes us to a dialog for the examples. We just have to enter the XOR table. Via "Add Row" we add three more rows:

Input1, Input2, Output1

0, 0, 0

0, 1, 1

1, 0, 1

1, 1, 0

When you are done, click "OK" and choose our XOR training set at the top. Clicking the button "Train" will lead you to the settings for the training.

The option "Stopping Criteria" determines when the training is stopped. This can happen once the error (the discrepancy) has been reduced to a given limit (max error). It can also happen after a given number of training iterations (how often the examples are shown to the net). The quality after training may vary.

We leave "Max error" at its default. For "Limit max iterations" we try "2000".

The second option determines the learning rate. The lower the rate, the less the weights are changed per iteration and the more slowly our net will learn. Be careful: A higher learning rate is not necessarily better. If it is too high, the net will change the weights so strongly that it jumps over good solutions. Here you need to experiment. We keep the defaults and click on "Train".

Now an error graph is shown. The error should decrease; otherwise the training is not working well. After the training you can test the net with "set input". Individual inputs are separated by a space. The outputs won't be perfect, but when rounded they should give the right solution. Try other settings and experiment to get the best possible results.
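The two stopping criteria and the role of the learning rate can be sketched as a training loop in Java. This is not how easyNeurons is implemented. It is a deliberately small example under my own assumptions: a single neuron with a sigmoid output, trained on AND with a simple gradient step. The parameters `maxError` and `maxIterations` are named after the dialog options, everything else is hypothetical.

```java
class TrainingLoop {
    static final double[][] INPUTS = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
    static final double[] TARGETS = {0, 0, 0, 1}; // AND, a deliberately easy task

    double w1 = 0.0, w2 = 0.0, bias = 0.0; // the bias acts as a negative threshold
    double lastError; // summed squared error of the most recent iteration

    double output(double x1, double x2) {
        // The sigmoid squashes the weighted sum into the range 0..1.
        return 1.0 / (1.0 + Math.exp(-(w1 * x1 + w2 * x2 + bias)));
    }

    // Trains until the summed squared error drops to maxError or until
    // maxIterations passes over the examples are done. Returns the passes used.
    int train(double learningRate, double maxError, int maxIterations) {
        for (int iteration = 1; iteration <= maxIterations; iteration++) {
            lastError = 0.0;
            for (int n = 0; n < INPUTS.length; n++) {
                double out = output(INPUTS[n][0], INPUTS[n][1]);
                double diff = TARGETS[n] - out;
                lastError += diff * diff;
                // A larger learning rate means larger weight changes per example.
                double delta = learningRate * diff * out * (1.0 - out);
                w1 += delta * INPUTS[n][0];
                w2 += delta * INPUTS[n][1];
                bias += delta;
            }
            if (lastError <= maxError) {
                return iteration; // stopping criterion 1: error small enough
            }
        }
        return maxIterations; // stopping criterion 2: iteration limit reached
    }

    public static void main(String[] args) {
        TrainingLoop net = new TrainingLoop();
        int used = net.train(0.5, 0.01, 2000);
        System.out.println("Iterations: " + used + ", error: " + net.lastError);
    }
}
```

Try different learning rates and limits and watch how the number of iterations and the remaining error change. With a learning rate of 0 the weights never change, so the loop always runs until the iteration limit.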

2. Letter recognition with the neuroph framework

You will find several examples from the framework's authors in the zip file. Search for XorMulitLayerPerceptronSample.java and try it out. You can find this file in the neuroph folder under this path: sources/neuroph/src/org/neuroph/sample/XorMulitLayerPerceptronSample.java

Your task: Write a program that trains an ANN to recognize letters.

For this you need pictures of letters. You can create them with Paint. Don't make them too big; 30x30 pixels should be enough. Make sure that all letters are placed in the same area of the picture and have the same size (otherwise your ANN will have problems).

To begin with, you can train a net to determine whether it sees an "A" or not. Teach it several fonts and test it with an unknown font.

Afterwards you can expand it to more letters. For instance, you may create three letters and a net with three output neurons. Each neuron would stand for one letter. If neuron 1 fires, the answer may be "it is an A"; if neuron 2 fires, the answer would be "B", and so on. You determine what the outputs stand for by creating the training set.
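The idea of one output neuron per letter can be sketched like this. The helper names (`LetterCoding`, `targetFor`, `letterFor`) are hypothetical and not part of Neuroph. Since real outputs are rarely exactly 0 or 1, I assume here that the neuron with the highest output gives the answer.

```java
import java.util.Arrays;

class LetterCoding {
    static final char[] LETTERS = {'A', 'B', 'C'};

    // Desired output for a training example:
    // 1 for the letter's own neuron, 0 for all other neurons.
    static double[] targetFor(char letter) {
        double[] target = new double[LETTERS.length];
        for (int i = 0; i < LETTERS.length; i++) {
            if (LETTERS[i] == letter) {
                target[i] = 1.0;
            }
        }
        return target;
    }

    // Interprets the outputs of the net: the neuron with the highest value wins.
    static char letterFor(double[] outputs) {
        int best = 0;
        for (int i = 1; i < outputs.length; i++) {
            if (outputs[i] > outputs[best]) {
                best = i;
            }
        }
        return LETTERS[best];
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(targetFor('B')));          // prints [0.0, 1.0, 0.0]
        System.out.println(letterFor(new double[] {0.1, 0.8, 0.2})); // prints B
    }
}
```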

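Some help to read the images with Java:

The following program reads a BMP file into a vector. Black pixels put a 1 into the vector and white pixels a 0.

    import java.awt.image.BufferedImage;
    import java.io.File;
    import java.io.IOException;
    import javax.imageio.ImageIO;

    public class ImageToVector {
        public static void main(String[] args) throws IOException {
            // ImageIO.read returns null if the file format is not supported.
            BufferedImage img = ImageIO.read(new File("img/smile.bmp"));
            if (img == null) {
                throw new IOException("Could not decode img/smile.bmp");
            }
            int width = img.getWidth();
            int height = img.getHeight();
            int[] vector = new int[width * height];
            for (int y = 0; y < height; y++) {
                for (int x = 0; x < width; x++) {
                    // Row-major index: the rows of pixels are stored one after another.
                    int index = y * width + x;
                    // getRGB returns ARGB; an opaque white pixel is 0xffffffff.
                    // Every other pixel counts as black.
                    vector[index] = (img.getRGB(x, y) == 0xffffffff) ? 0 : 1;
                    System.out.print(vector[index]);
                }
                System.out.println();
            }
        }
    }

If you display the vector as a matrix (as the program above does for testing), you can see the output as a picture. A smiley picture would look like this: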

You may also teach a net to recognize the mood of a smiley, if you want to.

These basics, the Neuroph sample program and the image-reading code should suffice to accomplish the task. If you still have questions, you may ask here.

Remark: Neuroph already provides methods for using images as ANN input. But I recommend doing it as shown above, because that will help you understand how ANN work.

I wish you fun and success with it.

Java frameworks:

http://neuroph.sourceforge.net/

http://www.heatonresearch.com/encog (also for .Net & Silverlight)

Sources

I study computer science and had this as an optional subject.